Approach

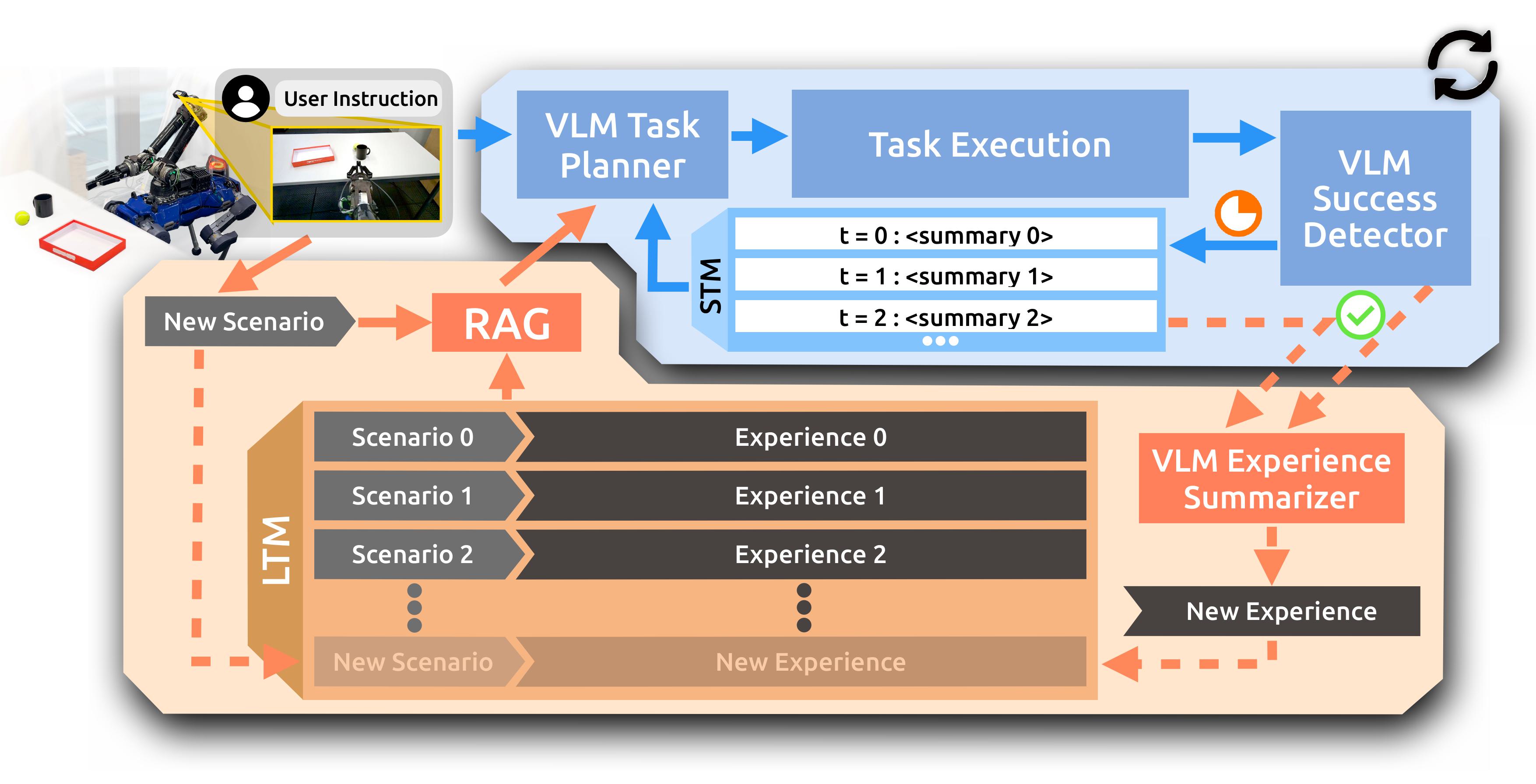

At the start of each task, the system receives the user instruction \( \mathbf{I} \) and the initial egocentric observation \( \mathbf{o}_{0} \), which the VLM summarizes into a scenario description. The RAG module then retrieves relevant experiences from long-term memory \( \mathbf{M} \); these are passed, together with the instruction and observation, to the VLM task planner \( \mathcal{T} \). After the planned action is executed, the VLM checks whether the task has succeeded. If the task is not yet completed, the action \( \mathbf{a} \) and its feedback \( \mathbf{r} \) are appended to short-term memory \( \mathbf{m} \), which is fed back into the next planning step. Once the task is completed, the short-term memory \( \mathbf{m} \) is summarized and stored in long-term memory \( \mathbf{M} \) for future use.
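The control flow described above can be sketched as a short loop. This is only an illustrative sketch, not the authors' implementation: the class `Agent`, its callable fields (`summarize`, `retrieve`, `plan`, `execute`, `check`, `condense`), the `max_steps` cap, and the toy stubs in `demo` are all hypothetical names introduced here. It further assumes that the scenario summary serves as the retrieval query, that execution returns an updated observation, and that every action-feedback pair (including the final successful one) is recorded in short-term memory.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Step = Tuple[str, str]  # (action a, feedback r)


@dataclass
class Agent:
    # All callables are hypothetical stand-ins for VLM / RAG / robot components.
    summarize: Callable[[str, str], str]               # (I, o_0) -> scenario
    retrieve: Callable[[str, List[str]], List[str]]    # (scenario, M) -> experiences
    plan: Callable[[str, str, List[str], List[Step]], str]  # planner T -> action a
    execute: Callable[[str], Tuple[str, str]]          # a -> (feedback r, new observation)
    check: Callable[[str, str], bool]                  # VLM success check
    condense: Callable[[List[Step]], str]              # summarize m for storage in M
    long_term: List[str] = field(default_factory=list)  # long-term memory M

    def run(self, instruction: str, observation: str, max_steps: int = 5) -> bool:
        scenario = self.summarize(instruction, observation)
        # Assumption: the scenario summary is used as the retrieval query.
        experiences = self.retrieve(scenario, self.long_term)
        short_term: List[Step] = []  # short-term memory m
        for _ in range(max_steps):  # max_steps is an assumed safety cap
            action = self.plan(instruction, observation, experiences, short_term)
            feedback, observation = self.execute(action)
            # Assumption: every (a, r) pair is recorded, including the last one.
            short_term.append((action, feedback))
            if self.check(instruction, observation):
                # On success, summarize m and store it in M for future tasks.
                self.long_term.append(self.condense(short_term))
                return True
        return False


def demo() -> Tuple[bool, List[str]]:
    """Toy run: the first attempt fails, the second succeeds."""
    feedbacks = iter(["drawer is locked", "drawer opened"])

    def plan(i: str, o: str, exp: List[str], m: List[Step]) -> str:
        # Replan differently once short-term memory holds a failed attempt.
        return "pull handle" if not m else "unlock then pull"

    def execute(action: str) -> Tuple[str, str]:
        new_obs = "open drawer" if action.startswith("unlock") else "closed drawer"
        return next(feedbacks), new_obs

    agent = Agent(
        summarize=lambda i, o: f"{i} | {o}",
        retrieve=lambda s, M: [e for e in M if "drawer" in e],
        plan=plan,
        execute=execute,
        check=lambda i, o: o == "open drawer",
        condense=lambda m: " -> ".join(f"{a}: {r}" for a, r in m),
    )
    ok = agent.run("open the drawer", "closed drawer")
    return ok, agent.long_term
```

Calling `demo()` runs two planning steps: the failed first attempt enters short-term memory and changes the second plan, and the condensed trace is stored in long-term memory only after the success check passes.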